Some thoughts on AI, DeepSeek and its implications for Energy

The history of a technology is a history of its decentralisation

The history of a technology is a history of its decentralisation. A new technology emerges that requires large economies of scale and has high costs. Over time, people innovate, costs fall, and smaller and smaller versions of the technology emerge and proliferate. That doesn’t mean large centralised versions of the technology disappear; they simply adopt the innovations alongside their scale, becoming even more powerful. But the same use cases, and new ones, are unlocked at smaller scales.

The most prominent example in recent history is computers. We started with mainframe computers, then Moore’s law and advances in chip technology moved us into PCs and eventually into mobile.

AI is on the same trajectory as any other technology. The recent DeepSeek release has accelerated the timeline.

The era of centralised AI

To date, AI has required massive amounts of compute to build and vast quantities of energy to run. This created large barriers to entry and huge investments ($500bn project Stargate anyone?). Grid connections and access to compute have been the bottlenecks for these models. To overcome the grid bottleneck, many hyperscalers are exploring on-site generation with microgrids. Scale Microgrids, Stripe and Paces recently published a white paper on what this could look like.

Enter DeepSeek

DeepSeek’s biggest impact is not that it was cheap to build, or that it came from China. It was that it introduced a new architecture for building AI with limited compute. Whether you believe they had access to the latest H100 GPUs or not, they were still at least somewhat resource-constrained and had to come up with a new approach. So far, hyperscalers have just chucked more and more compute at the problem to build better AIs. DeepSeek didn’t have that luxury.

DeepSeek doesn’t mean the end of centralised AI. Hyperscalers will adopt the new approach and keep chucking more compute at the problem. Their models will get better and better, but will be reserved for the most intense use cases. It’s the same with computers: despite everyone having a phone and a laptop, mainframes still exist in the form of supercomputers that we use to crunch massive problems.

What DeepSeek has done is accelerate the timeline to decentralised AI. Before, we would have had to wait until compute became efficient enough for today’s largest models to run on smaller infrastructure. Now, the efficiency improvements required are far smaller, and potentially unnecessary for some use cases.

The near-term future

What we’re seeing with DeepSeek, and will start to see with other models as they adopt its approach, is the ability to run a model locally - directly on your own computer - or in a private cloud instance. This has privacy benefits that enterprises value. It could also reduce latency, depending on how the network round trip to a hosted model compares with the extra time a less powerful local machine takes to compute the answer. As with everything, there are tradeoffs, and the beefier centralised models will still be used in many cases.
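The latency tradeoff can be sketched with a deliberately simplified model: total latency is the network round trip (zero on-device) plus the time to generate the response. The `InferenceOption` class and all of the speeds and round-trip times below are hypothetical figures chosen for illustration, and the model ignores streaming, prompt processing and queueing.

```python
from dataclasses import dataclass


@dataclass
class InferenceOption:
    """One place a model request could run (all figures are hypothetical)."""
    name: str
    network_rtt_ms: float     # round-trip time to reach the model; 0 if on-device
    tokens_per_second: float  # generation speed on that hardware

    def latency_ms(self, output_tokens: int) -> float:
        # Total time = time on the wire + time to generate the response.
        return self.network_rtt_ms + 1000.0 * output_tokens / self.tokens_per_second


def fastest(options: list[InferenceOption], output_tokens: int) -> InferenceOption:
    # Pick whichever option returns the full response soonest.
    return min(options, key=lambda o: o.latency_ms(output_tokens))


local = InferenceOption("laptop", network_rtt_ms=0.0, tokens_per_second=20.0)
cloud = InferenceOption("hosted", network_rtt_ms=150.0, tokens_per_second=80.0)

for n in (1, 200):
    print(n, fastest([local, cloud], n).name)
# 1 laptop
# 200 hosted
```

With these made-up figures, the laptop wins for a one-token reply because it skips the network round trip, while the faster hosted hardware wins once the response runs to hundreds of tokens.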

Note: this is why the DeepSeek news is actually good for Amazon and Microsoft, and potentially for chip manufacturers such as AMD. More AWS and Azure instances will be set up to run hosted models, and cheaper, non-NVIDIA chips could run them.

The long-term future

As further efficiencies and innovations are made in compute and AI architecture, sufficiently powerful AIs will run at smaller and smaller scales. Just as mainframes gave way to PCs and then mobile phones, over time the same level of output can be achieved on smaller devices. This will lead to a proliferation of edge compute as AI becomes embedded in everyday devices and new form factors.

The precise steps in this process will be determined by performance capabilities and use cases, and by whether the benefits of embedded AI (privacy, latency and so on) outweigh its drawbacks in size, scale and cost. For example, if an AI requires a room’s worth of compute and other infrastructure but only plays Go, most people will just connect to it via the internet. But if it’s pocket-sized and performs better because it runs locally on the device, then maybe people will buy it. Alternatively, if there’s an AI that optimises factory throughput, fits in a shipping container and offers the benefit of privacy, a lot of factories would install it.

What’s all this got to do with Energy?

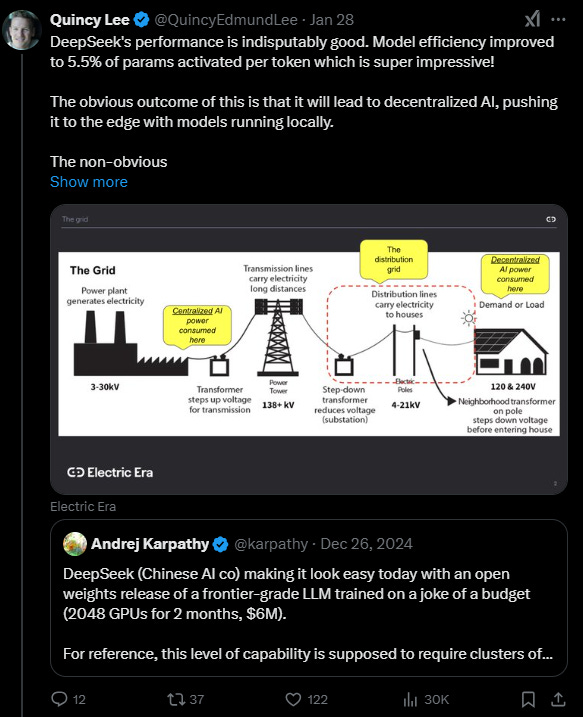

Quincy Lee made an excellent point about decentralised AI and edge compute: if we all start having AI assistants and massive amounts of compute in our homes, offices and factories, our energy consumption in those locations will also increase.

This puts further pressure on a distribution grid that is already struggling to adapt to distributed energy resources (DERs) such as batteries, EVs, solar panels and heat pumps. Just as centralised AI hyperscalers are struggling to get interconnections for their new datacenters, homes and offices could struggle to get interconnections for their AIs unless they also install technologies that avoid the need to upgrade the distribution grid, such as batteries that smooth out the load.
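To illustrate how a battery can smooth load, here is a minimal greedy peak-shaving sketch. It assumes a hypothetical import limit set by the existing connection, a battery that starts full and no charging losses; the function name, load profile and battery sizes are illustrative assumptions, not a real controller.

```python
def grid_import_kw(load_kw, import_limit_kw, battery_kwh, battery_kw, interval_h=1.0):
    """Greedy peak shaving (hypothetical controller, for illustration only).

    Discharges the battery whenever load exceeds the import limit and
    recharges it with any headroom below the limit. Assumes the battery
    starts full and ignores charging losses. Returns grid import per interval.
    """
    soc_kwh = battery_kwh  # state of charge
    imports = []
    for load in load_kw:
        if load > import_limit_kw:
            # Cover as much of the excess as the battery's power and stored energy allow.
            discharge = min(load - import_limit_kw, battery_kw, soc_kwh / interval_h)
            soc_kwh -= discharge * interval_h
            imports.append(load - discharge)
        else:
            # Recharge using spare capacity under the limit, without exceeding it.
            charge = min(import_limit_kw - load, battery_kw,
                         (battery_kwh - soc_kwh) / interval_h)
            soc_kwh += charge * interval_h
            imports.append(load + charge)
    return imports


# Hypothetical hourly home load (kW) with an evening spike from EV charging
# and a local AI workload, on a connection limited to 4 kW.
load = [1.0, 1.0, 2.0, 6.0, 7.0, 3.0, 1.0]
print(grid_import_kw(load, import_limit_kw=4.0, battery_kwh=10.0, battery_kw=5.0))
# [1.0, 1.0, 2.0, 4.0, 4.0, 4.0, 4.0]
```

Here the 6 kW and 7 kW hours are capped at 4 kW, so the connection never carries more than its limit; the battery then recharges from spare headroom in later hours. A real system would use load forecasts and tariffs rather than this simple rule.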

Marginal costs of generation and compute go to zero

An adjacent trend to what I’ve discussed so far is that, just as efficiency gains in compute are pushing its marginal cost towards zero, gains in energy generation are pushing the marginal cost of generation towards zero, particularly for solar and wind, which have no fuel costs.

The result is that centralised AI hyperscalers, which run compute remotely and send the results back, will massively overbuild compute alongside energy generation. It will then be cheaper to switch where the compute happens than to pay higher energy costs to keep running the model in the same location. For example, OpenAI may have a datacenter in Texas and one in India. When it is daytime in Texas and nighttime in India, it may be cheaper to run a model request in Texas, where the sun is shining and the locational marginal price (LMP) of electricity approaches $0, than in India, even if the request comes from India.

Caveats: there are a whole host of other costs involved. The India site could also have batteries charged during its own daytime, so its effective energy cost at night could approach $0 too, but this would require extra capex to set up the site, which may not be worth it. Lots of calculation and modelling would be required to determine the best approach for any two locations and their LMPs.
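A toy version of that calculation, assuming the only differences between sites are the local LMP and a flat non-energy cost of serving a workload from that site. The `Site` class, prices and costs are hypothetical, chosen only to show the shape of the decision.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A datacenter a workload could run in. All numbers are hypothetical."""
    name: str
    lmp_usd_per_mwh: float    # current locational marginal price at the site's node
    transfer_cost_usd: float  # extra cost of serving the workload from here (network, latency penalty, ...)

    def cost_usd(self, energy_mwh: float) -> float:
        # Energy bill at the local LMP plus the non-energy costs of running here.
        return self.lmp_usd_per_mwh * energy_mwh + self.transfer_cost_usd


def cheapest_site(sites: list[Site], energy_mwh: float) -> Site:
    return min(sites, key=lambda s: s.cost_usd(energy_mwh))


texas = Site("Texas (midday, solar)", lmp_usd_per_mwh=2.0, transfer_cost_usd=100.0)
india = Site("India (night)", lmp_usd_per_mwh=60.0, transfer_cost_usd=0.0)

print(cheapest_site([texas, india], energy_mwh=10.0).name)  # Texas (midday, solar)
print(cheapest_site([texas, india], energy_mwh=1.0).name)   # India (night)
```

With these numbers, a 10 MWh workload is cheaper to send to Texas despite the extra $100, whereas a 1 MWh workload is cheaper to keep in India: the energy saving has to outweigh the cost of moving the work.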

What are the implications for utilities?

Finishing this all up - the implications of AI for utilities are uncertain, with lots at stake. Many utilities are waiting for more certainty (never a good idea if you want to be in the lead). Some that have held off building the infrastructure to support hyperscaler datacenters may be relieved by the DeepSeek news, thinking it will reduce datacenters’ power needs. I disagree: the efficiency gains just mean we’ll be even more efficient at using the maximum amount of compute we can possibly get.
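This argument is a version of the Jevons paradox: efficiency gains can raise total consumption if demand responds strongly enough to falling prices. A worked sketch under a hypothetical constant-elasticity demand model, assuming the price of compute is proportional to the energy it uses; the elasticity values are illustrative, not estimates.

```python
def energy_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Factor by which total compute energy changes after an efficiency gain.

    Hypothetical model: demand for compute Q = A * price**(-elasticity), and
    price is proportional to energy per unit of compute. Cutting energy per
    unit by `efficiency_gain`x cuts price by the same factor, so demand rises
    by efficiency_gain**elasticity while each unit uses 1/efficiency_gain of
    the energy. Total energy therefore scales by efficiency_gain**(elasticity - 1).
    """
    return efficiency_gain ** (elasticity - 1.0)


# A 10x efficiency gain under three assumed elasticities of demand.
for elasticity in (0.5, 1.0, 1.5):
    print(elasticity, round(energy_multiplier(10.0, elasticity), 2))
# 0.5 0.32  -> inelastic demand: total energy falls
# 1.0 1.0   -> demand growth exactly offsets the efficiency gain
# 1.5 3.16  -> elastic demand: total energy rises (Jevons paradox)
```

Whether demand for AI is elastic enough for the last case is the open question; the sketch only shows that efficiency gains alone do not guarantee lower energy use.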

Near-term:

Utilities that don’t give centralised AI companies interconnections for their datacenters will miss out on load growth and the associated revenue. Datacenters will go where they can connect, or build their own generation. We may also see local governments putting pressure on utilities to upgrade the grid so that hyperscalers locate in their jurisdictions.

Long-term:

They will need to scale up the distribution grid so the same thing doesn’t happen with distributed AI. If they fail to do this, energy will be the bottleneck for distributed AI, and we will have to wait for further efficiency gains to bring its energy consumption down to a level the grid can support before we see it.

They will need to provide Locational Marginal Pricing (LMP) so AI companies know where energy is cheapest and can shift compute there. Again, this could be a condition for a datacenter to site in their service area.